Code

require(tidysynth)Loading required package: tidysynthWarning: package 'tidysynth' was built under R version 4.3.1Code

data("smoking")

smoking %>% dplyr::glimpse()Rows: 1,209

Columns: 7

$ state <chr> "Rhode Island", "Tennessee", "Indiana", "Nevada", "Louisiana…

$ year <dbl> 1970, 1970, 1970, 1970, 1970, 1970, 1970, 1970, 1970, 1970, …

$ cigsale <dbl> 123.9, 99.8, 134.6, 189.5, 115.9, 108.4, 265.7, 93.8, 100.3,…

$ lnincome <dbl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, …

$ beer <dbl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, …

$ age15to24 <dbl> 0.1831579, 0.1780438, 0.1765159, 0.1615542, 0.1851852, 0.175…

$ retprice <dbl> 39.3, 39.9, 30.6, 38.9, 34.3, 38.4, 31.4, 37.3, 36.7, 28.8, …Code

smoking_out <-

smoking %>%

# initial the synthetic control object

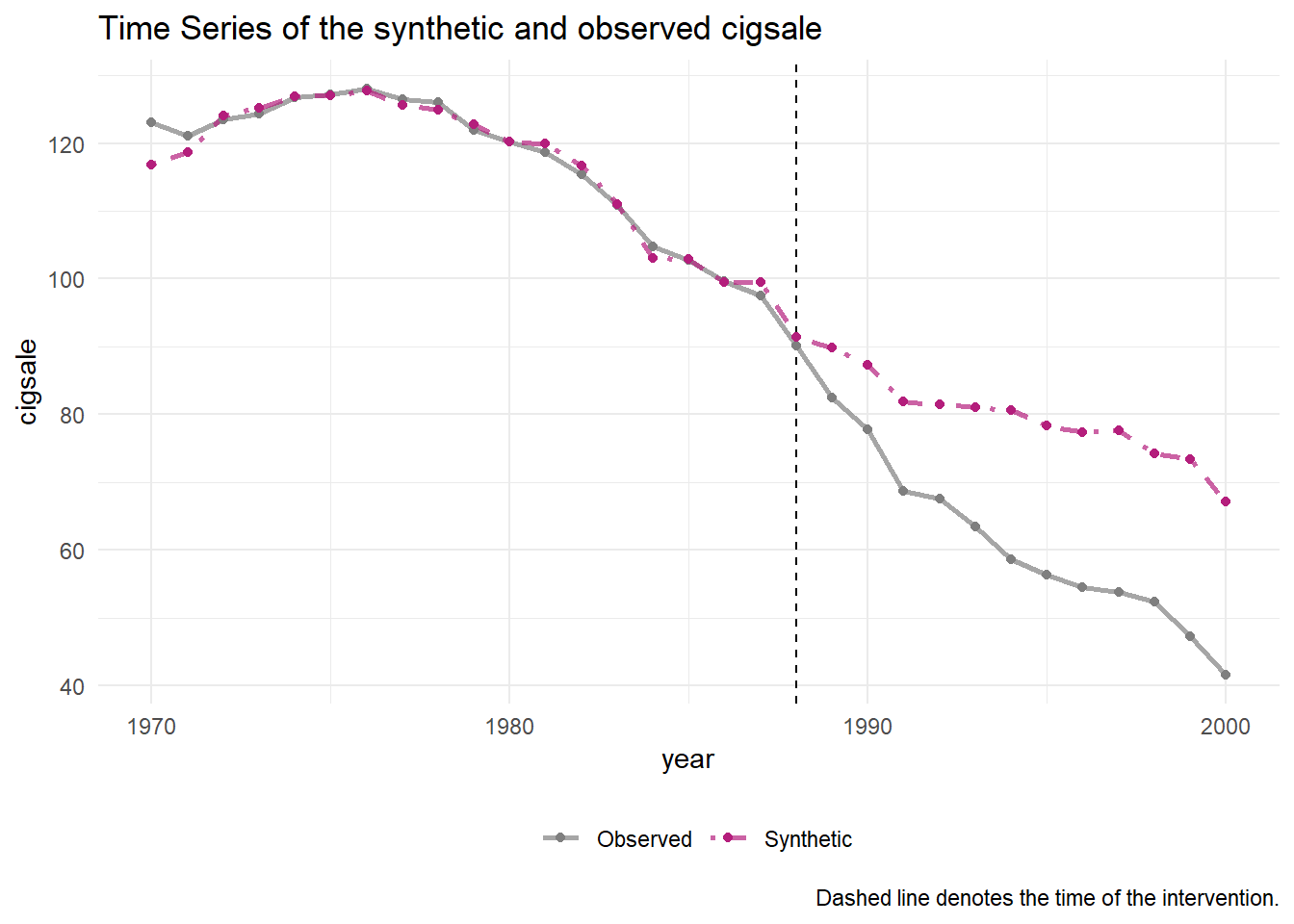

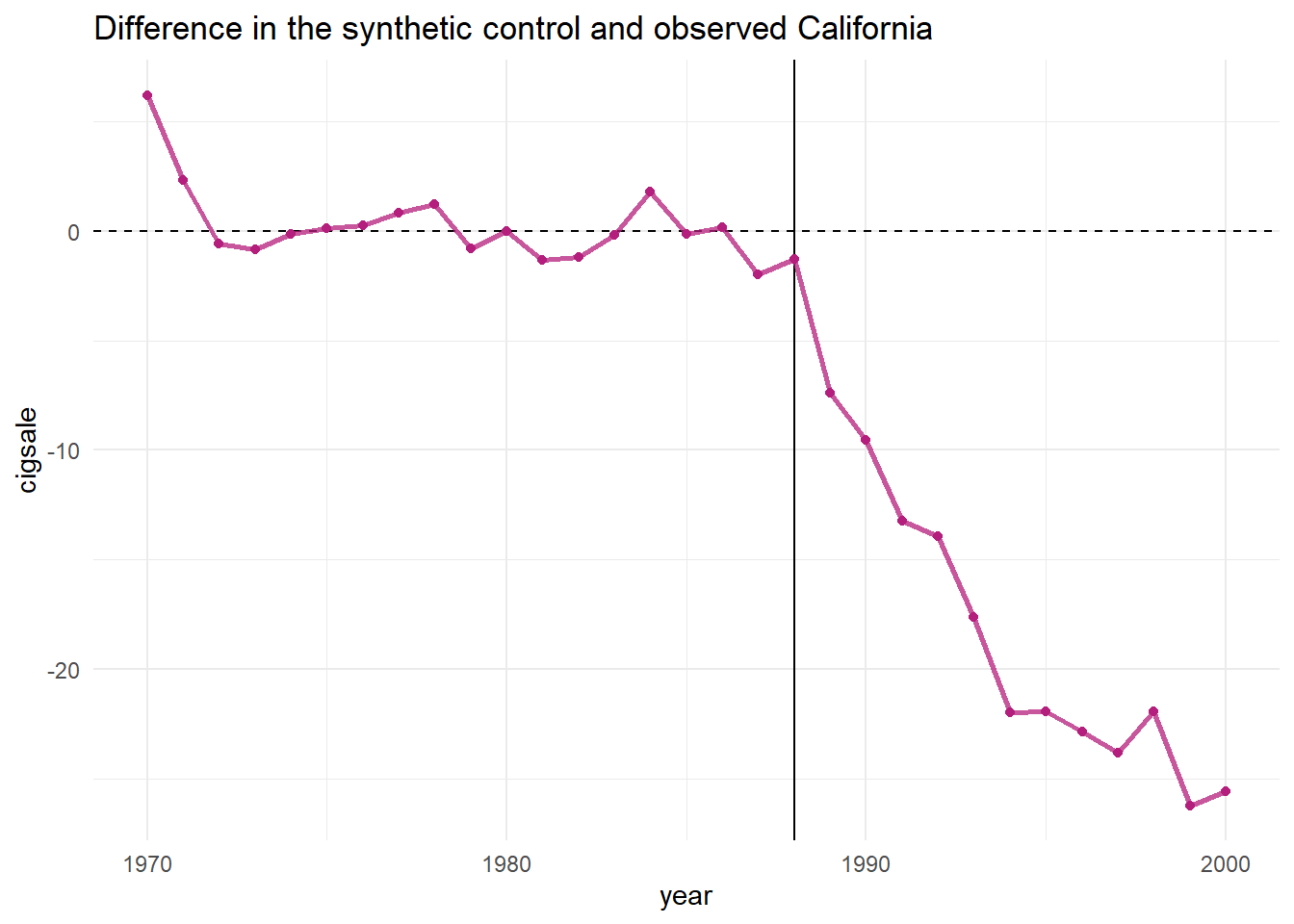

synthetic_control(outcome = cigsale, # outcome

unit = state, # unit index in the panel data

time = year, # time index in the panel data

i_unit = "California", # unit where the intervention occurred

i_time = 1988, # time period when the intervention occurred

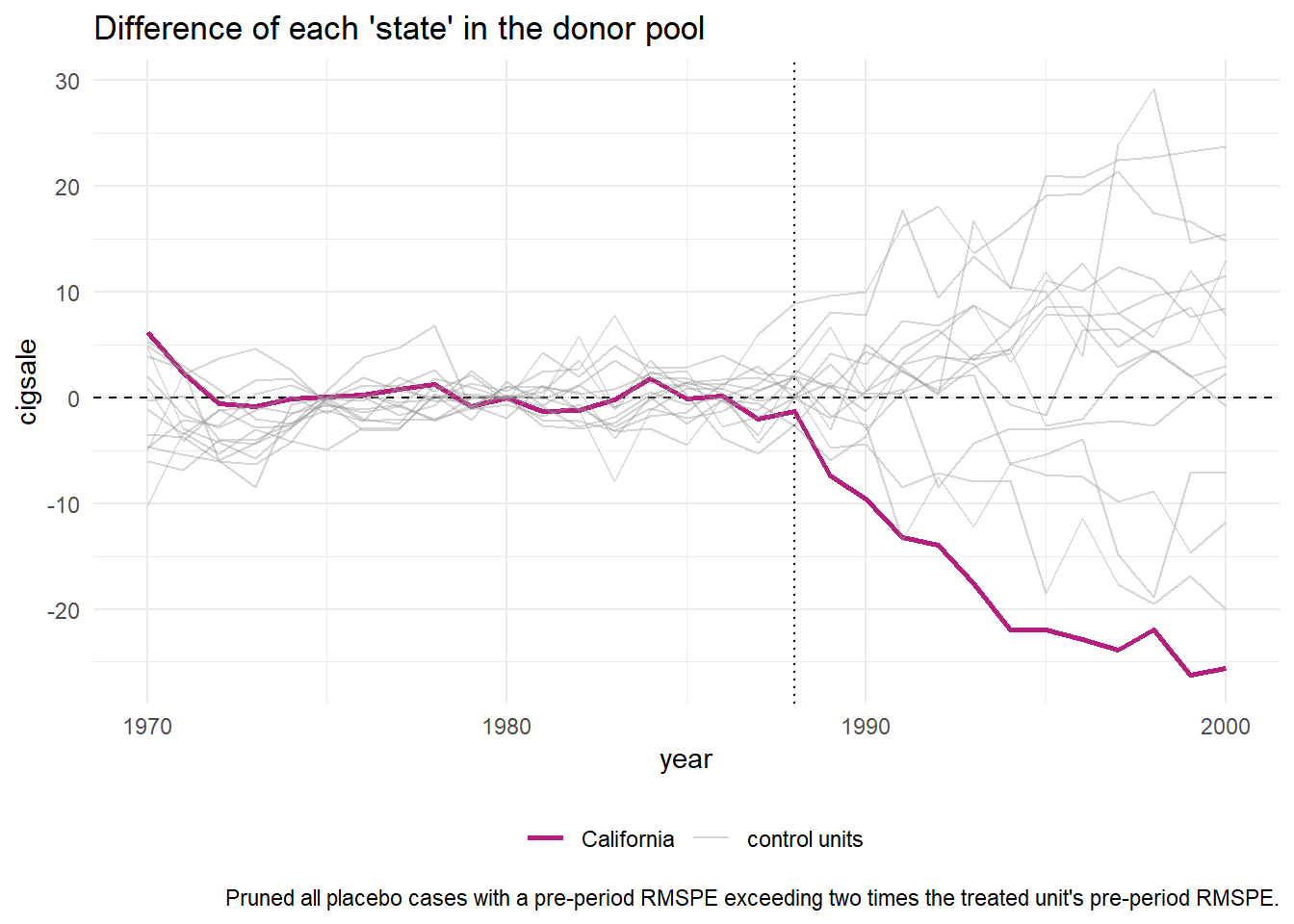

generate_placebos=T # generate placebo synthetic controls (for inference)

) %>%

# Generate the aggregate predictors used to fit the weights

# average log income, retail price of cigarettes, and proportion of the

# population between 15 and 24 years of age from 1980 - 1988

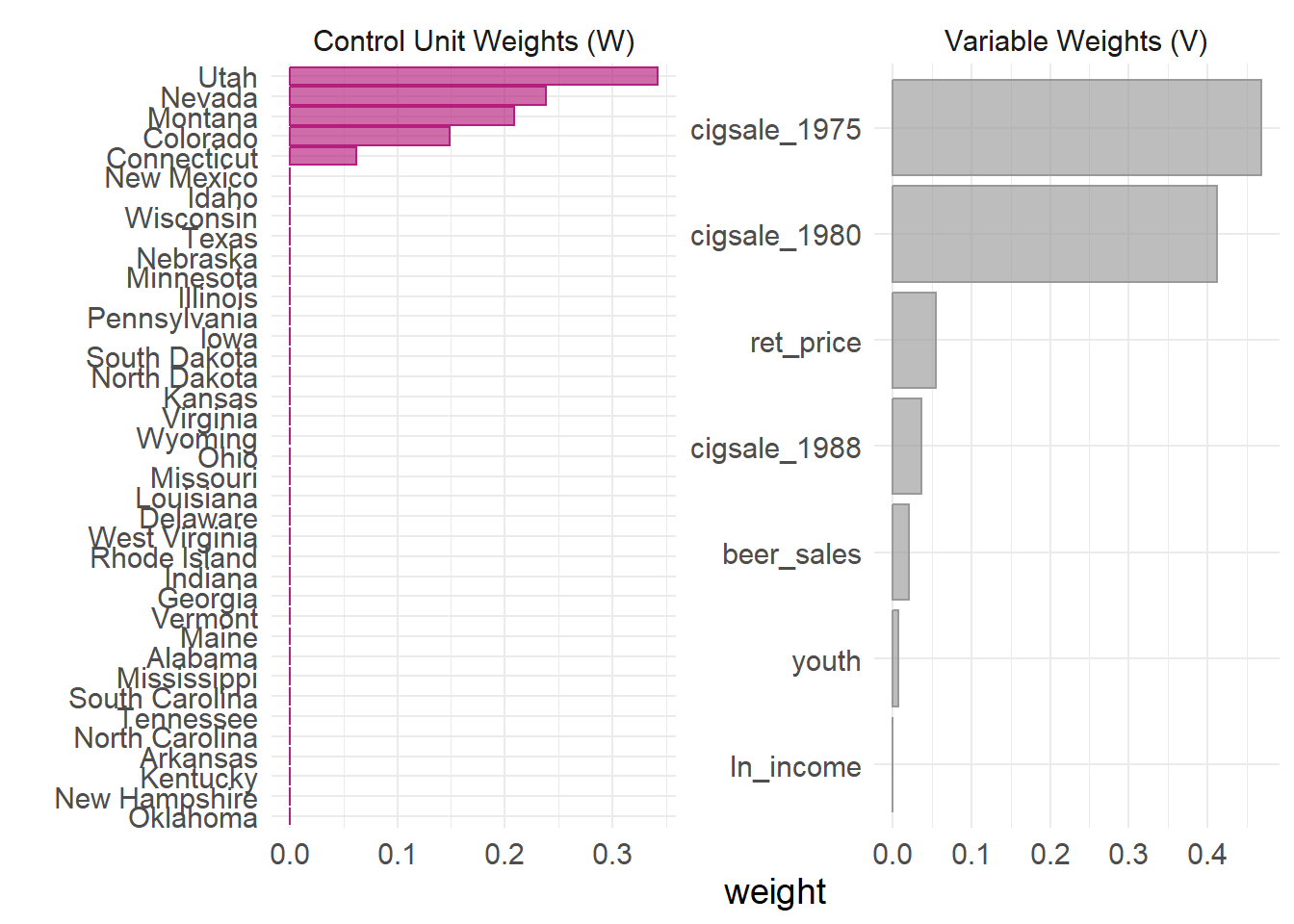

generate_predictor(time_window = 1980:1988,

ln_income = mean(lnincome, na.rm = T),

ret_price = mean(retprice, na.rm = T),

youth = mean(age15to24, na.rm = T)) %>%

# average beer consumption in the donor pool from 1984 - 1988

generate_predictor(time_window = 1984:1988,

beer_sales = mean(beer, na.rm = T)) %>%

# Lagged cigarette sales

generate_predictor(time_window = 1975,

cigsale_1975 = cigsale) %>%

generate_predictor(time_window = 1980,

cigsale_1980 = cigsale) %>%

generate_predictor(time_window = 1988,

cigsale_1988 = cigsale) %>%

# Generate the fitted weights for the synthetic control

generate_weights(optimization_window = 1970:1988, # time to use in the optimization task

margin_ipop = .02,sigf_ipop = 7,bound_ipop = 6 # optimizer options

) %>%

# Generate the synthetic control

generate_control()